Artificial Intelligence, real consequences. Cybercriminals are turning to AI voice cloning in a wave of sophisticated scams

Words by Karan Karayi

It might walk like a duck and quack like a duck, but it may well not be a feathered friend. Daffy though it might sound, welcome to the dark side of Artificial Intelligence (AI), where three seconds of audio is all it takes to pull off a heist, thanks to AI voice cloning tools. Using a small audio sample, these tools can clone the voice of nearly anyone and send bogus voice messages or make phoney calls for help that trick people into parting with their hard-earned money.

Take the cautionary tale of Rekha Vasudevan. When she got a call from her grandson Kirthan saying he was travelling, had had his wallet and cellphone stolen and needed cash, she did what any doting grandparent would and scrambled to help. She and her husband raced to the bank to withdraw the money, but a bank manager sat them down and explained that another customer had received a similar call, and that it might well be part of an elaborate hoax designed to extract money from unsuspecting victims.

That’s when the penny dropped for the septuagenarian, who until then had been convinced that the person on the other end of the line was her grandson.

We haven’t seen the last of schemes such as these. Powered by generative AI, they will only grow more sophisticated, making it increasingly difficult to distinguish between a human being and a machine. The mad sprint to win the generative AI race has opened something of a Pandora’s box, prompting the ‘Godfather of AI’, Geoffrey Hinton, to remark wryly: “The good news is, we have discovered the secret of immortality. The bad news is, it’s not for us.”

Worse still, as AI grows more intelligent, will it outpace human intelligence? And are we ready to tackle its dark side?

Modus operandi

Simply put, the nefarious are weaponising AI to prey on the vulnerable. With tools that are easy to access for a negligible fee, an audio sample of a few sentences is enough to create a replica of a voice, which can then be made to “say” whatever is typed into the interface. These applications are not new, but generative AI has given them a shot in the arm, and they have improved greatly. Where cloning a voice required a lot of audio as recently as a year ago, a simple 30-second clip taken from Instagram, Facebook, podcasts, vlogs or TikTok is now enough to create a digital replica of your voice.

Take ElevenLabs, an AI voice synthesis start-up founded in 2022. It burst into the news when its tool was used to make celebrities appear to say things they never did, such as Emma Watson supposedly reciting passages from Adolf Hitler’s “Mein Kampf.” ElevenLabs acknowledged the severity of the misuse and said it was introducing safeguards, including barring free users from creating custom voices and launching a tool to detect AI-generated audio. But this may be too little, too late; the horse has already bolted.

Because this threat has emerged so recently, law enforcement is ill-equipped to combat it. With few leads to identify the bad actors perpetrating these scams, the police struggle to trace the calls or follow the digital trail of money. And with no precedent for a crime like this, courts are left in the unenviable position of deciding whether companies can be held accountable, and what the quantum of punishment should be for an offence that has never been committed before. It’s the perfect storm for the perfect crime.

It preys on human emotion too; who wouldn’t respond to a distress call from a loved one? This is technology tugging at our heartstrings, with life-like, uncannily accurate voice replicas completing the ruse. All it takes is AI voice-generation software that analyses what makes a voice unique (including age, gender and accent) and combs through its database to find similar voices, enabling it to re-create the pitch, timbre and individual sounds of a person’s voice with eerie accuracy. It’s organised chaos at its finest.

For criminals, the possibilities for violating privacy, stealing identities and spreading misinformation are massive. That is why regulators must act swiftly to nip this kind of AI-enabled deception in the bud. If we don’t crack down on fraudsters now, it might be too late to do so later.

Indians: An easy target

If you thought that India was safe from these crimes, think again. A recent study by McAfee, titled ‘The Artificial Imposter’, revealed that India is the country with the highest number of victims: 83% of Indian victims reported monetary losses in AI voice scams, with nearly half of them (48%) losing more than ₹50,000.

Worryingly, nearly half (47%) of Indian adults have either fallen victim to some form of AI voice scam or know someone who has. That is almost twice the global average of 25%.

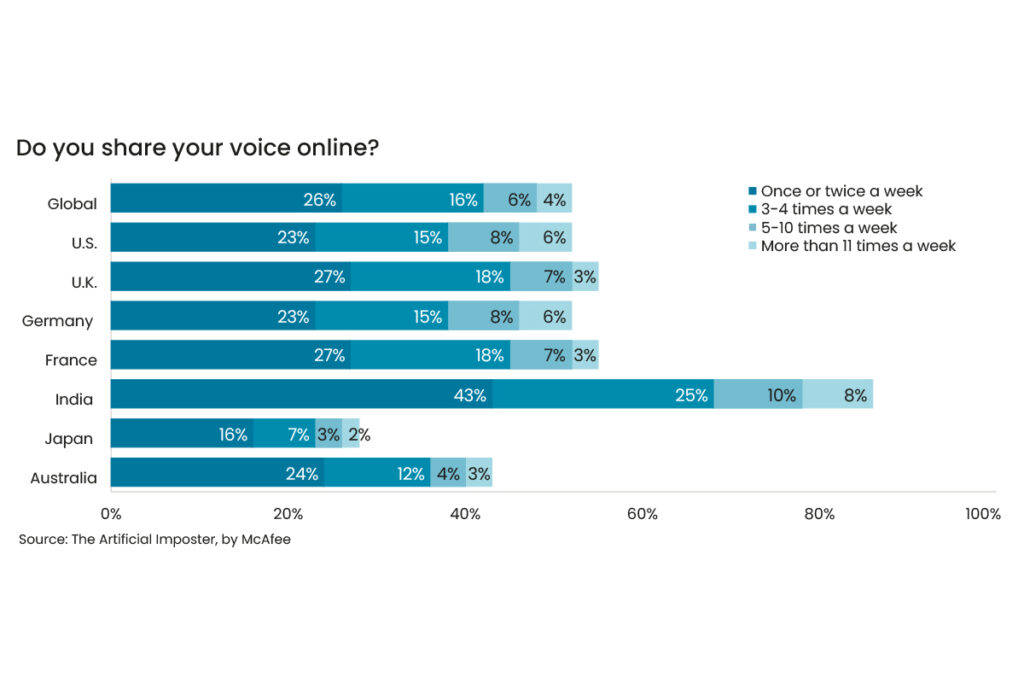

Voices are often distinctive, which is why they tend to be taken at face value (so to speak). But with 86% of Indian adults sharing their voice data online or through recorded notes at least once a week (on social media, voice notes, etc.), they are sitting ducks for cybercriminals looking to clone their voices. What’s more, 69% of Indians are unable to distinguish between a genuine human voice and an AI-generated one. And 66% of Indian survey participants admitted that they would respond to a voicemail or voice note that appeared to be from a friend or family member in urgent need of money, especially if it supposedly came from a parent (46%), partner or spouse (34%), or child (12%).

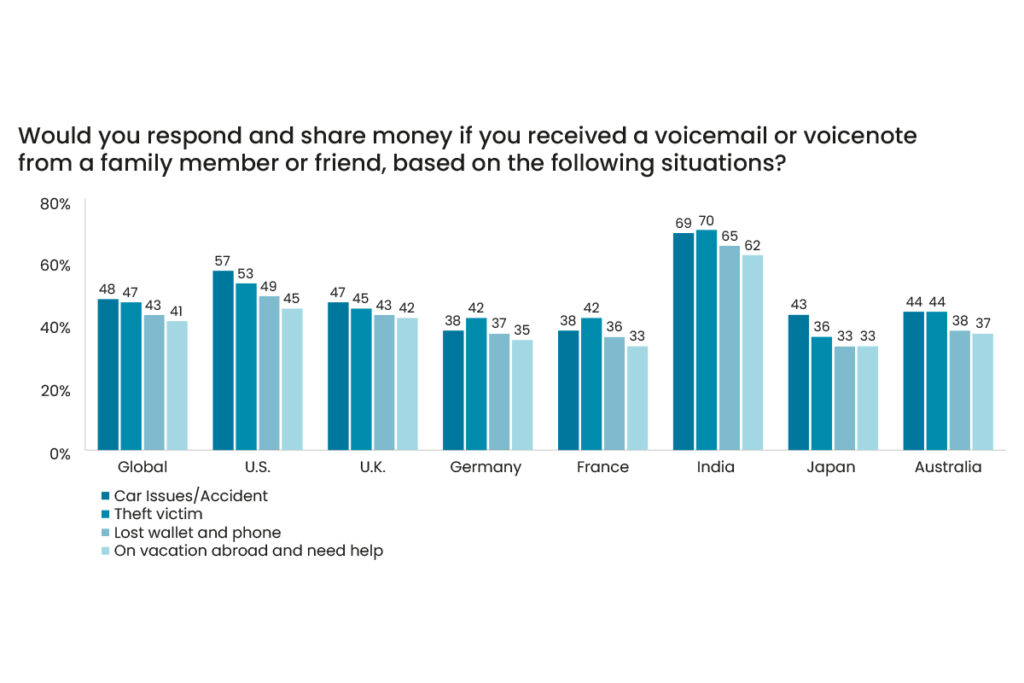

The report further revealed that messages that claim the sender was robbed (70%), involved in a car accident (69%), lost their phone or wallet (65%), or required assistance while travelling overseas (62%) were most likely to provoke a response.

All is not lost, though, as people are growing warier: 27% of Indian adults have lost faith in social media platforms, while 43% are apprehensive about the growing prevalence of disinformation and misinformation.

In the words of the host of a popular TV show, “Satark rahe. Savdhan rahe, kyuki jurm aapki chaukhat pe dastak de sakta hai.” (“Stay alert. Stay cautious, because crime could come knocking at your door.”)

Attack of the clones

Alarming though this turn of events is, we cannot simply do away with AI-assisted voice cloning because of a few bad actors. For all the wolves in sheep’s clothing and the ethical concerns they raise, consider the positives: the technology could help people who have lost their voice regain a close facsimile of it, power more human-like virtual assistants, or churn out voiceover work for video and audio at speed.

The challenge is knowing when an AI voice is mimicking a loved one, and that may be easier said than done, as our ears can fail us at the most inopportune moment. The machines are coming, and we simply need to dig deeper into that well of human instinct and exercise caution if we feel the voice on the other end of the line is not what it seems. Let your gut feeling be the voice of reason, even as scammers race to pick your pocket.