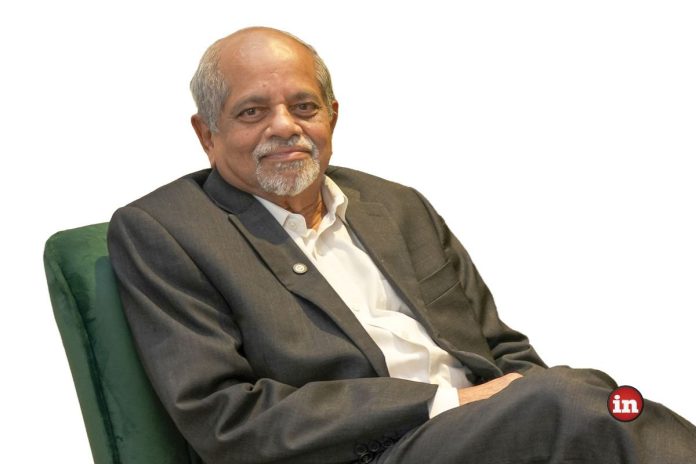

In the rush to deploy Artificial Intelligence, the greatest risk isn’t technical failure—it is ethical collapse. Ashank Desai, Principal Founder and Chairman of Mastek, outlines why the “conscience” of an algorithm is now a critical business asset.

For the last decade, the conversation around Artificial Intelligence has been dominated by capability: What can the machine do? Today, as AI weaves itself into the nervous system of global enterprise, the question has shifted to liability: What will the machine destroy if we aren’t careful?

Ashank Desai, a veteran of the Indian IT industry and a founding member of NASSCOM, sees the landscape not just as a technologist, but as a custodian of corporate governance. In a recent discussion, he warned that the “rush to release” is creating a taxonomy of risks that the C-suite is often ill-equipped to handle.

The Taxonomy of Risk

Desai argues that the danger of inequitable AI (systems that lack fairness, transparency, or inclusivity) manifests in four distinct ways. The first is reputational. In an era when brand value is tied to social values, a biased algorithm is a PR catastrophe waiting to happen. “People will say you are racist or against a certain community,” Desai notes, warning that models built on data that isn’t “completely representative” can backfire spectacularly.

The second is regulatory. With the European Union’s AI Act and emerging global frameworks, non-compliance is no longer just a faux pas; it is a legal exposure. The third is strategic misalignment—the inefficiency born of decisions made by “black box” algorithms that leaders cannot explain or justify.

But it is the fourth risk that Desai frames as the most existential: societal inequality. “You have people who know AI and those who don’t know AI. Those who are better off know how to use AI… urban areas know how to use AI, rural don’t,” he observes. This digital bifurcation creates a world of “haves and have-nots,” deepening cultural divisions and risking a backlash that could stifle innovation itself.

The Shared Burden of Ethics

When an AI model discriminates, who is to blame: the builder or the user? Desai rejects the binary. “The responsibility lies with both,” he asserts.

For builders such as Mastek, the mandate is ethical governance. This means aligning with rigorous global standards such as ISO/IEC 42001 and NIST’s AI standards, among others. It involves “privacy impact assessments” and ensuring that client data is never used for model training without “explicit consent.” Mastek has institutionalized this through its AI Engineering Center of Excellence, which integrates legal compliance directly into the code.

However, the client cannot abdicate responsibility: it must ensure the solution is deployed in the right context. “They [organisations] need to do oversight… to see we are working on the right track. We don’t know your organization as much as you do,” Desai reminds leaders. Without constant oversight, review, and audit by the client, even a well-engineered tool can become a dangerous weapon.

And none of this is possible without an abundance of high-quality data. “What we need is good, clean, representative data, and that is most certainly the customer’s issue,” he says.

Diversity: Necessary, But Not Sufficient

A common panacea offered for AI bias is “diversity.” Desai agrees that diverse teams, as reports have found, are “87% more effective” thanks to the range of perspectives they bring, but he cautions against treating diversity as a silver bullet. “Just diversity won’t make it happen,” he argues. “You need good governance.”

A diverse team might spot a problem, but only a robust “data pipeline, without flaws” and a mature framework with ethical oversight can fix it. Sensitivity and empathy must be operationalized through tools that detect and correct bias in data labeling. Diversity provides the conscience; governance provides the guardrails.

India as the Standard-Bearer

Looking outward, Desai sees a pivotal role for the Indian IT sector. “With over 500,000 AI-skilled professionals, India is no longer just the world’s back office; it is its laboratory.” But scale is not enough. Desai urges the industry to “champion multi-stakeholder governance,” working through bodies like NASSCOM to create policy frameworks along the lines of NASSCOM’s “Responsible AI Resource Kit.”

By learning from global associations and promoting world-class certifications, Indian IT can set the benchmark for IT management, governance, and trustworthy AI. In doing so, it can ensure that the technology remains a bridge to a better future rather than a wedge that drives us apart.